Experiential Design - Task 1: Trending Experience

24.04.2024 - 19.05.2024 / Week 1 - Week 4

Lim Rui Ying / 0358986 / Experiential Design / Bachelor of Design (Hons) in Creative Media

Task 1: Trending Experience

CONTENT

Reflection

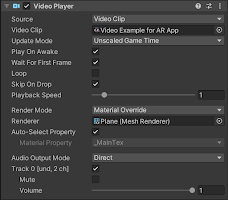


LECTURES

WEEK 1 | Module Briefing

We were briefed on this module's tasks and submissions. Mr Razif showed us past students' work to give us an idea of what an AR application can look like and of the areas for improvement to consider when executing this project.

Introduction to XR

|

| Extended Reality (XR) |

Types of AR Experiences

- Mobile (*mind that users mostly use one hand to interact with the virtual objects / use hand gestures)

- Projection - 3D projection mapping

- Head Mounted Display (HMD)

AR try-on: AR Animals & The Patung Project

We used our mobile phones to view the 3D animals and "patungs" from The Patung Project through augmented reality.

AR 3D cat and The Patung Project

Designing AR

- Marker-based: Requires a marker such as an image to activate the virtual object

- Markerless: Scan the surrounding environment to activate the virtual content

Design Thinking & Design Process

WEEK 3 | Designing Experience

Terminology

- XD (Experience Design)

- UX (User Experience)

- UI (User Interface)

- CX (Customer Experience)

- BX (Brand Experience)

- IxD (Interaction Design)

- SD (Service Design)

- IA (Information Architecture)

Journey Map

- To map user’s journey when they perform a certain task

- Have many touchpoints

- Each touchpoint may have positive or negative feelings (gain point & pain point)

Empathy Map

- Create a shared understanding

- Aid in decision making

- Components: Says, Thinks, Feels, Does

- Capture the way users interact, think and feel about the product

Browse through the Nielsen Norman Group website to get more information and resources on UX design.

INSTRUCTIONS

EXERCISES

WEEK 1 | Exercise 1

Imagine the scenario in either of the two places. What would the AR experience be and what extended visualisation can be useful? What do you want the user to feel?

Scenario: Sports Retail Store

The scenario I chose is Decathlon, a large sports retail store with up to four or five levels. Every time I go shopping there, I have difficulty finding the things I want to buy. An in-store navigation app that incorporates augmented reality technology would be helpful for me. Using the app, customers can search for the product they intend to find or purchase and reach it by following the AR directions provided in the app. Upon finding the product, an AR card pops up showing the product image, name and available stock.

Main features:

- Search products by categories, names

- Navigate customers to a certain product by showing AR directions

- Show the stock of a product

Visualisation:

Fig. 1.1 Visualisation of Decathlon navigation app (from left to right: 1-3)

- After selecting the desired product, a layout plan of the store will appear, indicating the customer's current location and the route to find the product.

- The live view navigation utilises AR to indicate the directions.

- Once the product is located, an AR card pops up beside it, displaying its name and available stock.

WEEK 3 | Group Activity: Journey Map

We were assigned to create a journey map of a place, listing the tasks performed there, along with gain points (positive aspects), pain points (negative aspects) and possible solutions that incorporate an AR mobile app. We worked in a group of six and chose Universal Studios Singapore (theme park) for user mapping.

|

| Fig. 1.2 Theme Park Journey Map - Universal Studios Singapore |

Feedback given by Mr Razif:

- AR Navigation - Offer a selection of designated journeys for visitors to choose from, limiting them to maybe three options to alleviate congestion.

- Ride Game - Relying solely on express tickets might not be ideal, as an excessive number of visitors purchasing them could lead to long queues.

- Live Shows - Restrict the number of pre-booked seats and allow for both online and physical bookings simultaneously to prevent situations where visitors are unable to book any seats.

- Eatery - The same applies to online seat reservations and food pre-orders: ensure that walk-in customers still have access to seating.

TASK 1: Trending Experience

Trending Experience 1: Marker-based AR

WEEK 3 | Image Target

This week, we started learning to use Unity. We first set up Unity and imported the Vuforia Engine package. Mr Razif explained the panels in Unity and their uses:

- Scene: space for designing game objects

- Game: to preview the outcome

- Project: contain all files

- Assets: the things you used in a project

- Main camera: the view of the camera

- Inspector: similar to properties, able to edit properties of a game object

- Hierarchy: all things in the hierarchy are called game objects

Next, we generated a license key and set up the Vuforia Engine database for creating the marker-based AR experience (Image Target). Ideally, the target should reach a rating of 4 so that it can be recognised properly.

|

| Fig. 2.1 Setting up database |

Image Target

We first learned to add a 3D cube to the Image Target. The Image Target serves as a marker to activate the 3D Object. It is important to note that the 3D Object needs to be placed as a child of the Image Target so it can appear correctly on the image. The 3D cube can be transformed in terms of its scale, position and rotation.

|

| Fig. 2.2 Adding a 3D cube to the Image Target |

Video 1: Outcome of the 3D cube on the Image Target

Next, we learned to incorporate a video on the Image Target by adding a Plane to it.

|

| Fig. 2.3 Adding a Plane to the Image Target |

On the Plane, add a Video Player component, and drag the video file to the Video Clip column. We can adjust settings like looping, playback speed, and volume within the Video Player component.

Video 2: Outcome of the Video Player on the Image Target

Using the same approach, I generated another Image Target featuring Spirited Away, a Japanese animated film by Hayao Miyazaki. I incorporated a 3D model and video of the characters from the movie.

Video 3: Outcome of Image Target - Spirited Away

Sources:

Video: https://youtu.be/LUU3LIZb0aI

3D model: https://skfb.ly/ozyIu

WEEK 4 | User Controls, UI (Buttons) & Build Settings

This week our tutorials covered the following:

- Add functions to the game object

- Create canvas and buttons, and add button functions

- Create animations on a game object

- Build settings and project settings

Add functions to a game object

We learned to add play and pause functions to the video player when the image target is found/lost.

In the Inspector panel for the Image Target, add an event to the list for when the target is found. Set the video player as the object and choose the VideoPlayer.Play function. For when the target is lost, follow the same steps but select the VideoPlayer.Pause function instead.

|

| Fig. 3.1 Adding functions to the video player when the target is lost/found |
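The same found/lost behaviour can also be sketched as a small script, which makes it easier to add guards such as checking whether the video is already playing. This is only a minimal sketch: the class name `TargetVideoControl` and its two method names are my own placeholders, and the methods still have to be added to the Image Target's found/lost event lists in the Inspector, just like VideoPlayer.Play and VideoPlayer.Pause above.

```csharp
using UnityEngine;
using UnityEngine.Video;

// Hypothetical helper (not from the tutorial): attach it to the Plane
// that already holds the Video Player component.
[RequireComponent(typeof(VideoPlayer))]
public class TargetVideoControl : MonoBehaviour
{
    private VideoPlayer videoPlayer;

    private void Awake()
    {
        // Cache the Video Player that sits on the same GameObject.
        videoPlayer = GetComponent<VideoPlayer>();
    }

    // Add this method to the Image Target's "target found" event list.
    public void HandleTargetFound()
    {
        if (!videoPlayer.isPlaying)
        {
            videoPlayer.Play();
        }
    }

    // Add this method to the Image Target's "target lost" event list.
    public void HandleTargetLost()
    {
        if (videoPlayer.isPlaying)
        {
            videoPlayer.Pause();
        }
    }
}
```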

When we scanned the Image Target, the video appeared on it and played automatically. When we moved the camera away from the Image Target, the video paused.

Another thing to note is the Track Device Pose setting of the AR Camera.

- Checked: If the tracker is lost, the object will remain in the same position

- Unchecked: If the tracker is lost, the object will also disappear

|

| Fig. 3.2 Track Device Pose |

We should uncheck the Track Device Pose to ensure the play/pause functions of the video player work well.

Video 4: Outcome of adding Play/Pause functions to the video player

Create canvas and buttons, and add button functions

Play/pause buttons

The play/pause functions can also be controlled using buttons. We created a canvas and two buttons (Play and Pause) on it.

|

| Fig. 3.3 Creating a canvas and play/pause buttons |

First, select the button you want to configure. In the Button component, apply the following settings to assign the play and pause functions to each button:

- Object: select Plane (Video Player)

- On Click function: select VideoPlayer.Play (for Play button), VideoPlayer.Pause (for Pause button)

|

| Fig. 3.4 Adding play function to the button |
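For comparison, the On Click settings above could also be wired up from code. The sketch below assumes a hypothetical `VideoButtons` component placed on the Canvas, with the Video Player and both buttons dragged into its fields in the Inspector; it is an alternative to the Inspector setup, not what we did in class.

```csharp
using UnityEngine;
using UnityEngine.UI;
using UnityEngine.Video;

// Hypothetical component: wires the Play and Pause buttons in code
// instead of through the On Click list in the Inspector.
public class VideoButtons : MonoBehaviour
{
    [SerializeField] private VideoPlayer videoPlayer; // the Plane's Video Player
    [SerializeField] private Button playButton;
    [SerializeField] private Button pauseButton;

    private void OnEnable()
    {
        // Same effect as choosing VideoPlayer.Play / VideoPlayer.Pause in On Click.
        playButton.onClick.AddListener(videoPlayer.Play);
        pauseButton.onClick.AddListener(videoPlayer.Pause);
    }

    private void OnDisable()
    {
        // Remove the listeners so they are not registered twice on re-enable.
        playButton.onClick.RemoveListener(videoPlayer.Play);
        pauseButton.onClick.RemoveListener(videoPlayer.Pause);
    }
}
```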

Video 5: Outcome of Play/Pause functions using buttons

Show/hide buttons

Next, apply the GameObject.SetActive function to show or hide the Cube:

- SetActive checked: This means that SetActive is true. Clicking the Show button will display the Cube.

- SetActive unchecked: This means that SetActive is false. Clicking the Hide button will hide the Cube.

Select the button you want to configure. Then, apply the settings to set the show and hide functions to the buttons:

- Object: select Cube

- On Click function: set GameObject.SetActive checked (for Show button), unchecked (for Hide button)

|

| Fig. 3.5 Adding show function to the button |
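In script form, the checked and unchecked states correspond to passing `true` or `false` to `SetActive`. The following is a minimal sketch with a hypothetical `CubeVisibility` component; I assume it is attached to an object other than the Cube (for example the Canvas) and that the Cube is dragged into its field.

```csharp
using UnityEngine;

// Hypothetical component: exposes show/hide methods that a button's
// On Click list can call instead of GameObject.SetActive directly.
public class CubeVisibility : MonoBehaviour
{
    [SerializeField] private GameObject cube; // the Cube under the Image Target

    // Equivalent to SetActive with the checkbox ticked.
    public void Show()
    {
        cube.SetActive(true);
    }

    // Equivalent to SetActive with the checkbox unticked.
    public void Hide()
    {
        cube.SetActive(false);
    }

    // Optional: a single button could flip the current state instead.
    public void Toggle()
    {
        cube.SetActive(!cube.activeSelf);
    }
}
```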

Video 6: Outcome of Show/Hide functions

Create animations on a game object

Next, we learned how to create basic animations for the Cube:

1. Create a new folder named "Animations".

2. In this folder, create an animation named "Cube_idle".

3. Open the Animation panel and record the animation of the Cube moving upwards and downwards.

4. For a static animation of the Cube, create another animation named "Cube_stop" without recording any movements.

|

| Fig. 3.6 Recording animation of the Cube |

To activate the animation of the Cube, we first have to set the default state of the Cube to static and add buttons to control the animations.

|

| Fig. 3.7 Set "Cube_stop" (static animation) as the Layer Default State |

Animate/Stop Animate buttons

To set the function for the Animate button to play the animation of the Cube:

- Select the Animate button

- Object: select Cube

- On Click function: choose Animator.Play

- In the field provided, type "Cube_idle"

|

| Fig. 3.8 Setting the play animation function to the button |

For the Stop Animate button:

- Select the Stop Animate button

- Object: select Cube

- On Click function: choose Animator.Play

- In the field provided, type "Cube_stop"

|

| Fig. 3.9 Setting the stop animation function to the button |
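The two button setups above both call `Animator.Play` with a state name, so they can be sketched in one small script. The class name `CubeAnimationControl` is a placeholder of mine, and the sketch assumes the Animator states are named exactly "Cube_idle" and "Cube_stop", matching the animation names used earlier.

```csharp
using UnityEngine;

// Hypothetical component: plays the Cube's animation states by name,
// mirroring the Animator.Play entries set on the two buttons.
public class CubeAnimationControl : MonoBehaviour
{
    [SerializeField] private Animator cubeAnimator; // Animator on the Cube

    // For the Animate button: start the up-and-down animation.
    public void Animate()
    {
        cubeAnimator.Play("Cube_idle");
    }

    // For the Stop Animate button: switch back to the static state.
    public void StopAnimate()
    {
        cubeAnimator.Play("Cube_stop");
    }
}
```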

Video 7: Outcome of Animate/Stop Animate functions on the Cube

Build settings

Since I am using a MacBook and an iPhone, I selected iOS under the Build Settings.

|

| Fig. 3.10 Build Settings |

Project settings

For the project settings, we should fill in the basic information such as the product name and app version. The icon and splash image are optional.

Under Resolution and Presentation, make sure "Render Over Native UI" is checked.

Under Other Settings, make the following adjustments:

- Uncheck Metal API Validation

- Camera Usage Description: type "AR Camera"

- Target minimum iOS Version: 15.0.

- Architecture: choose ARM64
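Some of these values can also be set from an editor script, which may help when the same settings are needed in several projects. This is only a rough sketch under my own assumptions: the class name, the menu path and the product name "MyARApp" are placeholders, the file would need to sit in a folder named "Editor", and it covers only the product name, version, camera usage description and minimum iOS version.

```csharp
#if UNITY_EDITOR
using UnityEditor;

// Hypothetical editor utility (placeholder names): applies a few of the
// Player settings listed above from a menu item in the Unity editor.
public static class IosPlayerSettingsSketch
{
    [MenuItem("Tools/Apply iOS Player Settings (sketch)")]
    public static void Apply()
    {
        PlayerSettings.productName = "MyARApp";   // placeholder product name
        PlayerSettings.bundleVersion = "1.0";     // placeholder app version

        // Text shown when iOS asks the user for camera permission.
        PlayerSettings.iOS.cameraUsageDescription = "AR Camera";

        // Target minimum iOS version.
        PlayerSettings.iOS.targetOSVersionString = "15.0";
    }
}
#endif
```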

Run in Xcode

After completing the build settings and project settings, I tried to run the app in Xcode. However, I encountered issues with the Bundle Identifier and a Sandbox error.

|

| Fig. 3.12 Run in Xcode |

I managed to run it on my phone once, but there were some issues: the buttons didn't appear on the screen, and there was no sound for the video. I could only tap roughly where the buttons should have been for them to function.

Video 8: Trying out the app on mobile phone

Trending Experience 2: Markerless AR

WEEK 6 | Ground Plane

This week, we created a markerless AR experience by detecting a flat surface and spawning a 3D object on the ground.

After setting up the Vuforia package in the Unity project, add the following objects to the Hierarchy:

- Plane Finder: Enables detection of surfaces
  - Duplicate Stage checked: Allows spawning multiple objects
- Ground Plane Stage: Allows placing objects on the ground
  - The object to spawn in the real world has to be a child of the Ground Plane Stage

First, we added a cube as the child of the Ground Plane Stage. Then, we used a ground plane simulator to simulate the real ground and tested whether the cube could be spawned on the ground.

|

| Fig. 4.1 Ground plane simulator |

Video 9: Test in Unity

We were required to create another markerless AR experience using virtual furniture to simulate the IKEA app, which places virtual furniture in real environments.


|

| Fig. 4.2 Adding a 3D sofa to the scene |

|

| Fig. 4.3 Building the app in Xcode |

I successfully built the app and was able to place the sofa on the ground. However, I had not noticed that the sofa was facing backwards, so when I placed the virtual sofa on the ground, it displayed its back view.
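One possible way to correct the orientation would be to turn the model by 180 degrees around its vertical axis, either by editing the Rotation Y value in the Inspector or with a small script. The sketch below is an assumption on my part and was not part of the tutorial; `FaceForward` and `yawOffsetDegrees` are placeholder names.

```csharp
using UnityEngine;

// Hypothetical fix: attach to the sofa model (child of the Ground Plane
// Stage) to turn it around so its front faces the viewer when placed.
public class FaceForward : MonoBehaviour
{
    // Extra rotation around the vertical (Y) axis, in degrees.
    [SerializeField] private float yawOffsetDegrees = 180f;

    private void Awake()
    {
        // Apply the offset once, relative to the model's current local rotation.
        transform.localRotation *= Quaternion.Euler(0f, yawOffsetDegrees, 0f);
    }
}
```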

Video 10: Markerless AR experience - Virtual Furniture

Source:

Sofa 3D model

WEEK 9 | Scene Navigation

ProBuilder

- To create a 3D space or room for a portfolio or gallery showcase.

- Install ProBuilder: Window - Package Manager - Unity Registry [Search: ProBuilder] - Install

- Open ProBuilder Window: Tools - ProBuilder - ProBuilder Window

I created an open space for a furniture showcase by placing two walls with a flat surface and positioning a sofa in the centre.

|

| Fig. 5.1 Building the space using ProBuilder |

Create scenes

Next, we renamed the current scene, which contains the 3D space, to "ARScene" and created two more scenes, "Menu" and "Exit". We created buttons in all scenes for navigation:

- Menu scene: START and EXIT buttons

- AR scene: MENU and EXIT buttons

- Exit scene: MENU and START buttons

Fig. 5.2 Menu scene, AR scene and Exit scene

Create Scene Manager script

Before creating a script for scene navigation, ensure the scenes are properly set up:

After that, create a C# Script file under a new folder named "Scripts"

in the "Assets" folder. Rename the script file to "MySceneManager"

immediately to ensure the class name matches the file name. In the

We learned two different ways to script the navigation:

1. Individual navigation scripts for each scene

|

| Fig. 5.4 Method 1 |

2. Generic navigation script using strings

|

| Fig. 5.5 Method 2 |
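As a rough reconstruction of the two methods (not the exact code shown in the figures), both can live in the same "MySceneManager" script. The names `GoToMenu`, `GoToARScene` and `GoToExit` are placeholders I made up for Method 1, while `changetoscene` follows the function name selected on the buttons. `SceneManager.LoadScene` only finds scenes that have been added to the scene list in the Build Settings.

```csharp
using UnityEngine;
using UnityEngine.SceneManagement;

// Sketch of the navigation script; the file name must match the class name.
public class MySceneManager : MonoBehaviour
{
    // Method 1: one function per scene (hypothetical function names).
    public void GoToMenu()
    {
        SceneManager.LoadScene("Menu");
    }

    public void GoToARScene()
    {
        SceneManager.LoadScene("ARScene");
    }

    public void GoToExit()
    {
        SceneManager.LoadScene("Exit");
    }

    // Method 2: one generic function; the scene name is typed into the
    // text field of the button's On Click entry.
    public void changetoscene(string sceneName)
    {
        SceneManager.LoadScene(sceneName);
    }
}
```

Method 2 keeps the script short because adding a new scene only requires typing a different name on the button, whereas Method 1 needs a new function for every scene.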

Add the script component to the buttons

Next, we have to add the script to the buttons. Before that, create an empty object, name it "SceneManager", and drag it to the On Click list of the button. Select "MySceneManager" – "changetoscene" and type the scene name in the text field.

|

| Fig. 5.6 Add the "SceneManager" to the button |

In the outcome, I made the 3D space smaller for a better view in the Unity preview.

Video 11: Outcome of scene navigation

WEEK 10 | UI Element

This week, we learned to import and apply custom-designed UI elements to buttons. Below are the steps:

Button styles:

- Change colours using Colour Tint
  - Highlighted colour: shown on mouse-over
  - Pressed colour: shown when clicked/tapped
- Swap the button image using Sprite Swap to create simple animations for the button
- Import the customised background for the button
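The same button styles can also be set from a script, which shows what the Colour Tint and Sprite Swap options change behind the scenes. This is a minimal sketch, assuming a hypothetical `ButtonStyle` component attached to the button; the colour values are arbitrary examples and the sprites would be the imported custom designs.

```csharp
using UnityEngine;
using UnityEngine.UI;

// Hypothetical component: applies either Colour Tint or Sprite Swap
// styling to the Button on the same GameObject.
[RequireComponent(typeof(Button))]
public class ButtonStyle : MonoBehaviour
{
    [SerializeField] private bool useSpriteSwap;
    [SerializeField] private Sprite highlightedSprite; // shown on mouse-over
    [SerializeField] private Sprite pressedSprite;     // shown when clicked/tapped

    private void Awake()
    {
        Button button = GetComponent<Button>();

        if (useSpriteSwap)
        {
            button.transition = Selectable.Transition.SpriteSwap;

            // SpriteState is a struct: copy it, edit it, then assign it back.
            SpriteState sprites = button.spriteState;
            sprites.highlightedSprite = highlightedSprite;
            sprites.pressedSprite = pressedSprite;
            button.spriteState = sprites;
        }
        else
        {
            button.transition = Selectable.Transition.ColorTint;

            // ColorBlock is also a struct, so the same copy-edit-assign applies.
            ColorBlock colors = button.colors;
            colors.highlightedColor = new Color(0.9f, 0.9f, 1f); // example tint
            colors.pressedColor = new Color(0.6f, 0.6f, 0.8f);   // example tint
            button.colors = colors;
        }
    }
}
```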

REFLECTION

In Exercise 1, we described a scenario in which augmented reality (AR) could enhance the experience. Having chosen navigation in the Decathlon store as my scenario, I did some research on AR indoor navigation and created mockups for the store. This helped me understand the applications of AR technology in daily life. Additionally, the group activity of mapping out a journey enabled us to identify user pain points and consider how AR integration could address them. It is important to recognise user challenges and examine whether AR solutions could effectively enhance their experiences.

I found using Unity software challenging at first as I am new to AR. It took me some time to familiarise myself with the space and tools within Unity. Through this process, I gained insight into how existing AR experiences are created. I learned a lot from this task and believe that it sets a solid foundation for developing our AR project. I am looking forward to applying the basic techniques I've learned to create my own AR app.
